Chris Gaskett

Papers Index

- Patent: 視線座標変換方法、それのプログラムおよびそれを記録した記録媒体 (Method of transforming and recording gaze coordinates)

- Is Optimal Foraging a Realistic Expectation in Orb-web Spiders?

- Creativity in the Cane Fields: Motivating and Engaging IT Students Through Games

- Foveated Vision Systems with Two Cameras per Eye

- Hand-Eye Coordination through Endpoint Closed-Loop and Learned Endpoint Open-Loop Visual Servo Control

- Support Vector Machines and Gabor Kernels for Object Recognition on a Humanoid With Active Foveated Vision

- Online Learning of a Motor Map for Humanoid Robot Reaching

- Reinforcement Learning Under Circumstances Beyond its Control

- Q-Learning for Robot Control

- Learning Implicit Models during Target Pursuit

- Reinforcement Learning for a Vision Based Mobile Robot

- Reinforcement Learning for Visual Servoing of a Mobile Robot

- Q-Learning in Continuous State and Action Spaces

- Autonomous Control and Guidance for an Underwater Robotic Vehicle

- Reinforcement Learning for a Visually-Guided Autonomous Underwater Vehicle

- Reinforcement Learning applied to the control of an Autonomous Underwater Vehicle

- Development of a Visually-Guided Autonomous Underwater Vehicle

Most of my research has been on learning systems for vision-based robotics. After moving to JCU I did some biological modelling work.

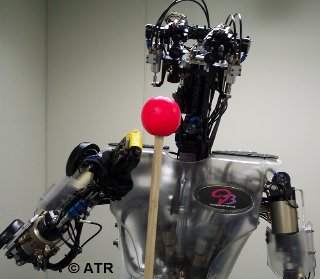

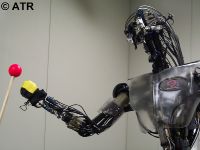

I developed a vision-based learning control system for a humanoid robot at ATR International in Kyoto, Japan. The software is written in Scheme and runs under PLT's DrScheme (earlier versions ran under VxWorks using Colin Smith's vx-scheme).

At Toyota Central Research and Development Laboratory (Toyota CRDL, Inc.) in Nagoya I worked on stereo vision and on processing data from Seeing Machines' faceLAB (see patent below).

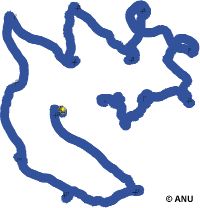

I completed my PhD at the Robotic Systems Laboratory, part of the Research School of Information Sciences and Engineering at the Australian National University. I developed a learning control system that uses Q-learning with continuous states and actions. This system was applied to learning visual servoing and vision-based wandering on a real Nomad 200 mobile robot, and to control of the HyDrA active head. I also worked on the Submersible Robot (Kambara) Project, designing most of the electronics for the vehicle, including 720 W amplifiers, the power supply, power distribution and leak detection.

My undergraduate degrees were completed at the Royal Melbourne Institute of Technology University (RMIT University): Bachelor of Engineering (Computer Systems) with First Class Honours, and Bachelor of Applied Science (Computer Science).

Patent: 視線座標変換方法、それのプログラムおよびそれを記録した記録媒体

(Method of transforming and recording gaze coordinates)

Chris Gaskett

Japanese patent application 2002-38390, granted 2003-241892. Rights owned by Toyota Central Research and Development Laboratory.

Is Optimal Foraging a Realistic Expectation in Orb-web Spiders?

Will Edwards, Poppy A. Whytlaw, Bradley C. Congdon, and Chris Gaskett

Ecological Entomology, Vol 34, 2009, pp 527-534

1. Explanations for web relocation invoking optimal foraging require reliable differentiation between individual sites and overall habitat quality. We characterised natural conditions of resource variability over 20 days in artificial webs of the orb-web spider Gasteracantha fornicata to examine this requirement.

2. Variability in catch success was high. Day-to-day catch success at 90% (18/20) of catch sites fitted negative binomial distributions, whereas the remaining 10% fitted Poisson distributions. Considered across trap sites (overall habitat), variance in catch success increased proportionally faster than the mean (i.e. Taylor's Power Law, $\text{variance} = 0.54\,\text{mean}^{1.764}$).

3. We compared the confidence intervals for the expected cumulative catch in randomly drawn sequential samples from a frequency distribution representing the overall habitat (based on the parameters for Taylor's Power Law) and the frequency distribution of expected cumulative catch within each individual catch site [via randomisation based on the mean and negative binomial exponent (k)].

4. In all cases and across all sample sizes, median values for the power to differentiate habitat and catch sites never exceeded 0.2, suggesting that principles involved in optimal foraging, if operating, must be accompanied by a very high degree of uncertainty.

5. Under conditions of high resource variability, many days must be spent in a single catch site if movement decisions are based on an ability to differentiate the current catch site from the overall habitat. Empirical evidence suggests this condition is never met. This may explain why proximal mechanisms that elicit quickly resolved behavioural responses have been more successful in describing web relocation patterns than those associated with optimal foraging.
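Taylor's Power Law in point 2 relates each site's variance in catch to its mean as $\text{variance} = a\,\text{mean}^b$. As an illustration only, the Python sketch below fits $a$ and $b$ by ordinary least squares on the log-log scale; this fitting method and the helper names `site_stats` and `fit_taylor_power_law` are assumptions, not the procedure reported in the paper. A Poisson process would give $a = b = 1$, so $b > 1$ means catches become disproportionately more variable as the mean rises.

```python
import math


def site_stats(daily_catches):
    """Sample mean and unbiased variance of one catch site's daily catch counts."""
    n = len(daily_catches)
    mean = sum(daily_catches) / n
    var = sum((c - mean) ** 2 for c in daily_catches) / (n - 1)
    return mean, var


def fit_taylor_power_law(means, variances):
    """Fit variance = a * mean**b by least squares on log(variance) vs log(mean).

    Every mean and variance must be positive for the logarithms to exist."""
    xs = [math.log(m) for m in means]
    ys = [math.log(v) for v in variances]
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx                       # slope on the log-log scale
    a = math.exp(y_bar - b * x_bar)     # intercept transformed back
    return a, b


# Noise-free check: data generated from the reported relation is recovered.
means = [0.5, 1.0, 2.0, 4.0, 8.0]
variances = [0.54 * m ** 1.764 for m in means]
a, b = fit_taylor_power_law(means, variances)
print(round(a, 3), round(b, 3))  # 0.54 1.764
```

With real data each entry of `means` and `variances` would come from `site_stats` applied to one site's 20 daily counts.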

Creativity in the Cane Fields: Motivating and Engaging IT Students Through Games

C. Lemmon, N.J. Bidwell, M. Hooper, C. Gaskett, J. Holdsworth, and P. Musumeci

Proceedings of the 2nd Annual Microsoft Academic Days Conference on Game Development, (aboard Disney Wonder Cruise Ship, 2007)

Foveated Vision Systems with Two Cameras per Eye

Ales Ude, Chris Gaskett, and Gordon Cheng

Proceedings of the IEEE Int. Conf. Robotics and Automation (ICRA 2006), (Orlando, Florida, May 2006)

We present an exhaustive analysis of the relationship between the positions of the observed point in the foveal and peripheral views with respect to the intrinsic and extrinsic parameters of both cameras and the 3-D point position.
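The relationship analysed here builds on the standard pinhole model, in which a 3-D point $X$ maps to pixel coordinates through $x \sim K(RX + t)$ with intrinsic matrix $K$ and extrinsics $R, t$. The Python sketch below projects one point into a hypothetical peripheral camera and a hypothetical foveal camera; all parameter values and function names are illustrative assumptions, and lens distortion is ignored. When the two cameras share a centre and orientation, the foveal offset from the principal point is just the peripheral offset scaled by the ratio of focal lengths; a physical offset between the cameras breaks that simple ratio and makes it depend on the point's depth, so the 3-D point position matters as well.

```python
def mat_vec(M, v):
    """3x3 matrix times 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def intrinsics(f, cx, cy):
    """Pinhole intrinsic matrix with square pixels and no skew."""
    return [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]


def project(K, R, t, X):
    """Project world point X to pixel (u, v) using x ~ K (R X + t)."""
    Xc = [r + ti for r, ti in zip(mat_vec(R, X), t)]  # world -> camera frame
    if Xc[2] <= 0:
        raise ValueError("point is behind the camera")
    p = mat_vec(K, Xc)
    return p[0] / p[2], p[1] / p[2]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical eye: the foveal camera has a 5x longer focal length than the
# peripheral camera and is offset by 3 cm along the camera y axis.
K_periph, t_periph = intrinsics(300.0, 320.0, 240.0), [0.0, 0.0, 0.0]
K_fovea, t_fovea = intrinsics(1500.0, 320.0, 240.0), [0.0, -0.03, 0.0]

X = [0.1, 0.05, 2.0]  # a 3-D point 2 m in front of the eye
print(project(K_periph, IDENTITY, t_periph, X))  # peripheral pixel
print(project(K_fovea, IDENTITY, t_fovea, X))    # foveal pixel
```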

Hand-Eye Coordination through Endpoint Closed-Loop and Learned Endpoint Open-Loop Visual Servo Control

Chris Gaskett, Ales Ude, and Gordon Cheng

The International Journal of Humanoid Robotics, Vol 2, No 2, 2005, pp. 203-224

We propose a hand-eye coordination system for a humanoid robot that supports bimanual reaching. The system combines endpoint closed-loop and open-loop visual servo control. The closed-loop component moves the eyes, head, arms, and torso, based on the position of the target and the robot's hands, as seen by the robot's head-mounted cameras. The open-loop component uses a motor-motor mapping that is learnt online to support movement when visual cues are not available.
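The division of labour between the two components can be illustrated with a one-joint toy problem. In the Python sketch below, which is a hypothetical illustration and not the paper's controller, `closed_loop_reach` corrects the arm from the visually measured hand-to-target error, and a `MotorMotorMap` records each converged (gaze angle, arm angle) pair with the least-mean-squares rule so that it can later reach open-loop when the hand or target cannot be seen. The linear plant, the linear map and the gains are all assumptions.

```python
import random


def observed_hand_position(arm_angle):
    # Hypothetical plant: image position of the hand for a given arm angle.
    return 2.0 * arm_angle + 0.5


def closed_loop_reach(target, arm_angle=0.0, gain=0.2, tol=1e-6, max_steps=1000):
    """Endpoint closed-loop servoing: hand and target are both 'seen', and the
    arm is corrected from the measured hand-to-target error until it vanishes."""
    for _ in range(max_steps):
        error = target - observed_hand_position(arm_angle)
        if abs(error) < tol:
            break
        arm_angle += gain * error
    return arm_angle


class MotorMotorMap:
    """Hypothetical linear gaze-angle -> arm-angle map, updated online by LMS."""

    def __init__(self, rate=0.1):
        self.w, self.b, self.rate = 0.0, 0.0, rate

    def predict(self, gaze):
        return self.w * gaze + self.b

    def update(self, gaze, arm_angle):
        err = arm_angle - self.predict(gaze)
        self.w += self.rate * err * gaze
        self.b += self.rate * err


def train(n_trials=2000, seed=0):
    rng = random.Random(seed)
    mmap = MotorMotorMap()
    for _ in range(n_trials):
        gaze = rng.uniform(-1.0, 1.0)  # assume the eyes fixate the target
        arm = closed_loop_reach(gaze)  # visual feedback drives the hand there
        mmap.update(gaze, arm)         # learn from the converged posture pair
    return mmap


mmap = train()
# Open-loop reach without vision: close to the -0.1 the closed loop would find.
print(mmap.predict(0.3))
```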

Support Vector Machines and Gabor Kernels for Object Recognition on a Humanoid With Active Foveated Vision

Ales Ude, Chris Gaskett, and Gordon Cheng

Proceedings of the IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS 2004), (Sendai, Japan, September 2004)

Online Learning of a Motor Map for Humanoid Robot Reaching

Chris Gaskett and Gordon Cheng

Proceedings of the 2nd International Conference on Computational Intelligence, Robotics and Autonomous Systems (CIRAS 2003), (Singapore, December 2003)

Reinforcement Learning Under Circumstances Beyond its Control

Chris Gaskett

Proceedings of the International Conference on Computational Intelligence for Modelling Control and Automation (CIMCA2003), (Vienna, Austria, February 2003)

This paper describes an RL algorithm that uses an idea from decision theory.
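The paper's algorithm is not reproduced here. As an illustration of how a decision-theoretic idea can enter a reinforcement learning update, the Python sketch below uses the Hurwicz criterion, which blends the best and worst outcomes with a pessimism weight `beta`, in place of the max in the tabular Q-learning target. The function names and the tabular setting are assumptions, and the sketch is not necessarily the rule used in the paper.

```python
def hurwicz_value(action_values, beta):
    """Hurwicz criterion: weight the worst outcome by beta, the best by 1 - beta."""
    return (1.0 - beta) * max(action_values) + beta * min(action_values)


def pessimistic_q_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9, beta=0.5):
    """One tabular Q-learning step whose bootstrap target uses the Hurwicz value
    of the next state instead of the usual max (beta = 0 recovers Q-learning)."""
    target = r + gamma * hurwicz_value(Q[s_next], beta)
    Q[s][a] += alpha * (target - Q[s][a])
    return Q[s][a]


def fresh_table():
    # State 1 offers one good action (+1) and one bad action (-1).
    return {0: [0.0, 0.0], 1: [1.0, -1.0]}


print(pessimistic_q_update(fresh_table(), 0, 0, 0.0, 1, alpha=1.0, beta=0.0))  # 0.9
print(pessimistic_q_update(fresh_table(), 0, 0, 0.0, 1, alpha=1.0, beta=1.0))  # -0.9
```

Intermediate values of `beta` trade off how much the learner trusts that it will be able to pick the best action in the next state.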

Q-Learning for Robot Control

Chris Gaskett

PhD thesis from the Robotic Systems Laboratory, part of the Research School of Information Sciences and Engineering at the Australian National University.

As well as more detailed versions of the material in the papers below, the thesis includes a partial solution to the rising value problem identified by Thrun and Schwartz (1993). It also contains a longer and more up-to-date survey of continuous state and action reinforcement learning algorithms than the papers do.
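The problem identified by Thrun and Schwartz (1993) stems from applying the max operator to noisy value estimates: errors that average to zero for each action still produce a maximum that is biased upward, and bootstrapping can carry that bias from state to state. The Python sketch below only demonstrates the bias with a Monte Carlo estimate under a hypothetical uniform noise model; it does not implement the thesis's partial solution.

```python
import random


def mean_max_of_noisy_estimates(true_values, noise, trials=10000, seed=0):
    """Monte Carlo estimate of E[max_a (Q(a) + e_a)], e_a ~ Uniform(-noise, noise)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += max(q + rng.uniform(-noise, noise) for q in true_values)
    return total / trials


true_q = [0.0] * 10  # ten actions that are all equally good
# The true maximum is 0.0, but the maximum of the noisy estimates is roughly 0.8.
print(max(true_q), mean_max_of_noisy_estimates(true_q, noise=1.0))
```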

Learning Implicit Models during Target Pursuit

Chris Gaskett, Peter Brown, Gordon Cheng and Alex Zelinsky

Proceedings of the IEEE International Conference on Robotics and Automation (ICRA2003), (Taiwan, May 2003).

This paper demonstrates control of one joint of an active head and shows that the neural network has learnt a model of movement under gravity.
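Why a model of gravity matters for a single joint can be seen in a small simulation. In the hypothetical Python sketch below (the inertia, gravity coefficient and gains are made up and are not the active head's), a PD controller alone leaves a steady-state sag, because the proportional term must supply the gravity torque; adding the gravity torque $mgl\sin\theta$ as a feedforward term removes the error. A controller that holds the joint accurately has, in effect, to capture such a term, whereas the paper's network is described as learning it implicitly.

```python
import math


def simulate_joint(target, gravity_comp, steps=5000, dt=0.001):
    """Simulate one revolute joint under gravity, driven by a PD controller for
    steps * dt seconds, and return the final position error in radians.

    Hypothetical parameters: inertia, the gravity torque coefficient mgl
    (mass * g * distance to centre of mass) and the PD gains are chosen only
    so that the example is well behaved."""
    inertia, mgl, kp, kd = 0.05, 1.0, 5.0, 1.0
    theta, omega = 0.0, 0.0  # start at rest, hanging straight down
    for _ in range(steps):
        torque = kp * (target - theta) - kd * omega
        if gravity_comp:
            torque += mgl * math.sin(theta)  # feedforward gravity model
        accel = (torque - mgl * math.sin(theta)) / inertia
        omega += accel * dt  # semi-implicit Euler integration
        theta += omega * dt
    return target - theta


print(simulate_joint(0.5, gravity_comp=False))  # joint sags: about 0.08 rad error
print(simulate_joint(0.5, gravity_comp=True))   # error essentially zero
```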

Reinforcement Learning for a Vision Based Mobile Robot

Chris Gaskett, Luke Fletcher, and Alex Zelinsky

Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2000) © IEEE (Takamatsu, Japan, October 2000).

This paper applied the algorithm to wandering and servoing for a mobile robot. It also demonstrated learning from other behaviours.
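One way to learn from other behaviours is off-policy learning: Q-learning can improve its greedy policy using transitions generated by some other behaviour. The Python sketch below shows this in a hypothetical five-state corridor, learning only from a log produced by a uniformly random behaviour. The environment, names and constants are assumptions, and the tabular setting stands in for the paper's continuous state and action learner.

```python
import random

N_STATES = 5  # states 0..4; state 4 is the goal and ends the episode


def step(s, a):
    """Action 1 moves right, action 0 moves left; reaching the goal pays 1."""
    s_next = min(s + 1, N_STATES - 1) if a == 1 else max(s - 1, 0)
    reward = 1.0 if s_next == N_STATES - 1 else 0.0
    return s_next, reward


def collect_random_transitions(n, seed=0):
    """Log of (s, a, r, s') produced by a random 'other behaviour'."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s = rng.randrange(N_STATES - 1)  # any non-goal state
        a = rng.randrange(2)
        s_next, r = step(s, a)
        data.append((s, a, r, s_next))
    return data


def q_learning_from_log(data, alpha=0.1, gamma=0.9, sweeps=50):
    """Replay the logged transitions with the off-policy Q-learning update."""
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s, a, r, s_next in data:
            done = s_next == N_STATES - 1
            target = r if done else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
    return Q


Q = q_learning_from_log(collect_random_transitions(1000))
print([1 if q[1] > q[0] else 0 for q in Q[:4]])  # [1, 1, 1, 1]: always move right
```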

Reinforcement Learning for Visual Servoing of a Mobile Robot

Chris Gaskett, Luke Fletcher, and Alex Zelinsky

Proceedings of the Australian Conference on Robotics and Automation (ACRA2000), (Melbourne, Australia, August 2000)

This paper applied the algorithm to visual servoing of a mobile robot.

Q-Learning in Continuous State and Action Spaces

Chris Gaskett, David Wettergreen, and Alex Zelinsky

Proceedings of the 12th Australian Joint Conference on Artificial Intelligence © Springer-Verlag (Sydney, Australia, 1999).

This paper demonstrated improved performance through advantage learning. It also contained a survey of continuous state and action Q-learning methods and argued for the importance of off-policy learning. If you are interested in this paper, please also consider taking a look at my PhD thesis (above). The thesis is more up to date (it has a better survey) and describes experiments on real robots rather than only in simulation.
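Advantage learning replaces the Q-learning target with one that stretches the differences between actions by a factor $1/(k\,\Delta t)$. The Python sketch below writes the tabular form of the update as it is commonly given, with $V(s) = \max_a A(s, a)$; the names and constants are illustrative, and the paper itself works with continuous states and actions rather than a table. With $k = \Delta t = 1$ the target reduces to the ordinary Q-learning target.

```python
def advantage_update(A, s, a, r, s_next, alpha=0.1, gamma=0.9, k=0.5, dt=1.0):
    """One tabular advantage-learning step. A maps each state to a list of
    per-action values, and V(s) is taken as max_a A(s, a). With k = dt = 1 the
    target reduces to the ordinary Q-learning target r + gamma * V(s')."""
    v_s = max(A[s])
    v_next = max(A[s_next])
    target = v_s + (r + gamma ** dt * v_next - v_s) / (k * dt)
    A[s][a] += alpha * (target - A[s][a])
    return A[s][a]


# Same transition, two scaling factors: k < 1 stretches the target further
# from V(s), which widens the gaps between the values of different actions.
print(advantage_update({0: [0.0, 0.0], 1: [1.0, 0.0]}, 0, 0, 1.0, 1, alpha=1.0, k=1.0))
print(advantage_update({0: [0.0, 0.0], 1: [1.0, 0.0]}, 0, 0, 1.0, 1, alpha=1.0, k=0.5))
```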

Autonomous Control and Guidance for an Underwater Robotic Vehicle

David Wettergreen, Chris Gaskett, and Alex Zelinsky

Proceedings of the International Conference on Field and Service Robotics (FSR'99) (Pittsburgh, USA, September 1999)

Reinforcement Learning for a Visually-Guided Autonomous Underwater Vehicle

David Wettergreen, Chris Gaskett, and Alex Zelinsky

Proceedings of the International Symposium on Unmanned Untethered Submersibles Technology (UUST'99) (Durham, New Hampshire, USA, August 1999)

Reinforcement Learning applied to the control of an Autonomous Underwater Vehicle

Chris Gaskett, David Wettergreen, and Alex Zelinsky

Proceedings of the Australian Conference on Robotics and Automation (Brisbane, Australia, March 1999).

This paper introduced the wire-fitted neural network algorithm.
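In a wire-fitted neural network, the network maps a state to a small set of "wires", each an (action, value) pair, and the Q value of any other action is interpolated between them. The Python sketch below implements the wire-fitting interpolator in its usual form; the smoothing constant `c`, the small `eps` and the example wires are hypothetical, and the network that would produce the wires is omitted. Because the interpolated value is a positively weighted average of the wire values, its maximum lies at (or, with `eps > 0`, very close to) the highest-valued wire, so greedy action selection needs no search over the continuous action space.

```python
def wire_fit_q(u, wires, c=0.1, eps=1e-6):
    """Wire-fitting interpolation of Q at action u.

    wires is a list of (u_i, q_i) pairs, where u_i is an action tuple and q_i
    its value, as a network would output them for one state. Each wire is
    weighted by the inverse of its squared distance to u plus a penalty
    c * (q_max - q_i), so Q(u) is a weighted average of the q_i and cannot
    exceed q_max."""
    q_max = max(q for _, q in wires)
    num = den = 0.0
    for u_i, q_i in wires:
        dist2 = sum((x - y) ** 2 for x, y in zip(u, u_i))
        weight = 1.0 / (dist2 + c * (q_max - q_i) + eps)
        num += weight * q_i
        den += weight
    return num / den


def greedy_action(wires):
    """The interpolated surface peaks at (or next to) the highest-valued wire."""
    return max(wires, key=lambda w: w[1])[0]


wires = [((-1.0,), 0.2), ((0.3,), 0.9), ((1.0,), 0.5)]  # hypothetical network output
print(greedy_action(wires), wire_fit_q((0.3,), wires), wire_fit_q((0.0,), wires))
```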

Development of a Visually-Guided Autonomous Underwater Vehicle

David Wettergreen, Chris Gaskett, and Alex Zelinsky

Proceedings of IEEE OCEANS'98 (Nice, France, September 1998)